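Contents

1. Application scenarios

2. Convolutional neural network structure

2.1 Convolution

2.2 ReLU activation function

2.3 Pooling

2.4 Fully connected layer

2.5 Loss function (softmax_loss)

2.6 Forward propagation

2.7 Backward propagation (backpropagation)

2.8 Stochastic gradient descent with momentum (sgd_momentum)

3. Code implementation: flowchart and overview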

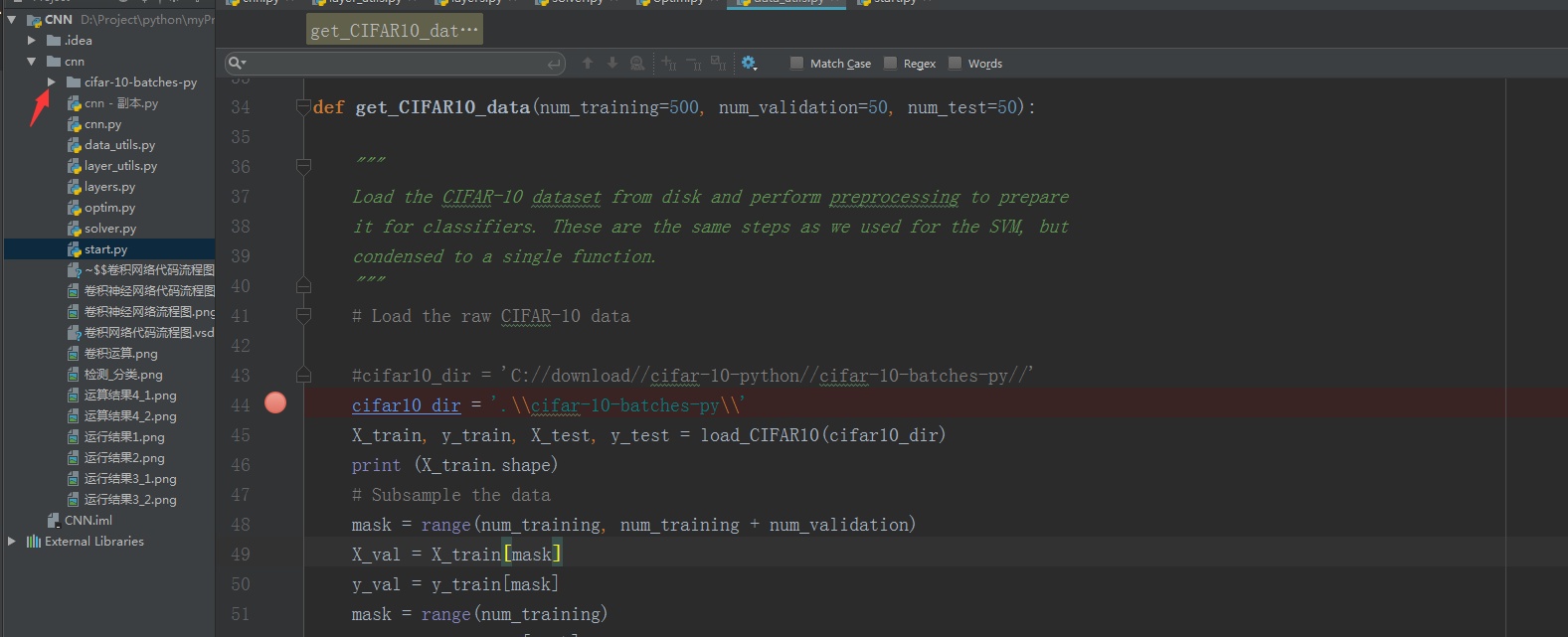

4. Code implementation (Python 3.6)

5. Results and analysis

6. References
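Sections 2.5 and 2.8 name the softmax loss (`softmax_loss`) and SGD with momentum (`sgd_momentum`), but neither appears in the code below. The following is a minimal NumPy sketch under assumed conventions: scores `x` of shape (N, C), integer labels `y` of shape (N,), and a `config` dict holding `learning_rate`, `momentum`, and a `velocity` buffer. The function names come from the outline; the signatures and config keys are assumptions, not necessarily the author's.

```python
import numpy as np


def softmax_loss(x, y):
    """Average softmax cross-entropy loss and its gradient w.r.t. the scores x.

    x: scores, shape (N, C); y: integer labels in [0, C), shape (N,).
    (Signature is an assumption for illustration.)
    """
    # Subtracting the row max keeps exp() from overflowing; softmax is unchanged.
    shifted = x - np.max(x, axis=1, keepdims=True)
    log_probs = shifted - np.log(np.sum(np.exp(shifted), axis=1, keepdims=True))
    probs = np.exp(log_probs)
    n = x.shape[0]
    loss = -np.sum(log_probs[np.arange(n), y]) / n
    # d(loss)/d(scores) = (softmax(x) - one_hot(y)) / N
    dx = probs.copy()
    dx[np.arange(n), y] -= 1
    dx /= n
    return loss, dx


def sgd_momentum(w, dw, config=None):
    """One SGD-with-momentum step: v = mu * v - lr * dw, then w = w + v.

    The config keys (learning_rate, momentum, velocity) are assumed names.
    """
    if config is None:
        config = {}
    config.setdefault("learning_rate", 1e-2)
    config.setdefault("momentum", 0.9)
    # The velocity starts at zero and is carried between calls in config.
    v = config.get("velocity", np.zeros_like(w))
    v = config["momentum"] * v - config["learning_rate"] * dw
    next_w = w + v
    config["velocity"] = v
    return next_w, config
```

With all-zero scores every class gets probability 1/C, so the loss equals log C; each gradient row sums to zero because the softmax probabilities and the one-hot vector both sum to one.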

# -*- coding: utf-8 -*-
try:
    from . import layers
except Exception:
    import layers

def affine_relu_forward(x, w, b):
    """
    Convenience layer that performs an affine transform followed by a ReLU.

    Inputs:
    - x: Input to the affine layer
    - w, b: Weights for the affine layer

    Returns a tuple of:
    - out: Output from the ReLU
    - cache: Object to give to the backward pass
    """
    a, fc_cache = layers.affine_forward(x, w, b)
    out, relu_cache = layers.relu_forward(a)
    cache = (fc_cache, relu_cache)
    return out, cache


def affine_relu_backward(dout, cache):
    """
    Backward pass for the affine-relu convenience layer.
    """
    fc_cache, relu_cache = cache
    da = layers.relu_backward(dout, relu_cache)
    dx, dw, db = layers.affine_backward(da, fc_cache)
    return dx, dw, db
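`affine_relu_forward` and `affine_relu_backward` delegate to `layers.affine_forward`, `layers.relu_forward`, `layers.relu_backward`, and `layers.affine_backward`, whose bodies are not shown here. A minimal NumPy sketch of what those helpers might look like, assuming the affine layer flattens each input sample to a row of length D and that each cache simply stores the forward inputs (both assumptions):

```python
import numpy as np


def affine_forward(x, w, b):
    """out = x_flat @ w + b, where x of shape (N, d1, ..., dk) is flattened to (N, D)."""
    out = x.reshape(x.shape[0], -1).dot(w) + b
    cache = (x, w, b)  # assumed cache layout
    return out, cache


def affine_backward(dout, cache):
    """Gradients of the affine layer w.r.t. x (in its original shape), w, and b."""
    x, w, b = cache
    x_flat = x.reshape(x.shape[0], -1)
    dx = dout.dot(w.T).reshape(x.shape)
    dw = x_flat.T.dot(dout)
    db = dout.sum(axis=0)
    return dx, dw, db


def relu_forward(x):
    """Elementwise max(0, x); the input itself is cached for the backward pass."""
    return np.maximum(0, x), x


def relu_backward(dout, cache):
    """Pass the upstream gradient through only where the forward input was positive."""
    x = cache
    return dout * (x > 0)
```

Chaining `affine_forward` then `relu_forward` reproduces the forward half of the convenience layer; the backward half runs the same pieces in reverse order, which is exactly the order used in `affine_relu_backward`.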

def conv_relu_forward(x, w, b, conv_param):
    """
    A convenience layer that performs a convolution followed by a ReLU.

    Inputs:
    - x: Input to the convolutional layer
    - w, b, conv_param: Weights and parameters for the convolutional layer

    Returns a tuple of:
    - out: Output from the ReLU
    - cache: Object to give to the backward pass
    """
    a, conv_cache = layers.conv_forward_fast(x, w, b, conv_param)
    out, relu_cache = layers.relu_forward(a)
    cache = (conv_cache, relu_cache)
    return out, cache


def conv_relu_backward(dout, cache):
    """
    Backward pass for the conv-relu convenience layer.
    """
    conv_cache, relu_cache = cache
    da = layers.relu_backward(dout, relu_cache)
    dx, dw, db = layers.conv_backward_fast(da, conv_cache)
    return dx, dw, db
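`conv_relu_forward` calls `layers.conv_forward_fast`, whose implementation is not shown. For intuition, here is a loop-based sketch of a convolution forward pass (strictly a cross-correlation, as in most CNN code), assuming `conv_param` is a dict with `stride` and `pad` keys and shapes x: (N, C, H, W), w: (F, C, HH, WW), b: (F,). These conventions are assumptions; a fast version would be expected to produce the same output through vectorization (for example, im2col) rather than Python loops.

```python
import numpy as np


def conv_forward_naive(x, w, b, conv_param):
    """Naive convolution (cross-correlation) forward pass.

    x: (N, C, H, W), w: (F, C, HH, WW), b: (F,)
    conv_param: {"stride": int, "pad": int} (assumed keys)
    Returns out of shape (N, F, H_out, W_out) and a cache for the backward pass.
    """
    stride, pad = conv_param["stride"], conv_param["pad"]
    N, C, H, W = x.shape
    F, _, HH, WW = w.shape
    H_out = 1 + (H + 2 * pad - HH) // stride
    W_out = 1 + (W + 2 * pad - WW) // stride
    # Zero-pad only the two spatial dimensions.
    x_pad = np.pad(x, ((0, 0), (0, 0), (pad, pad), (pad, pad)), mode="constant")
    out = np.zeros((N, F, H_out, W_out))
    for n in range(N):
        for f in range(F):
            for i in range(H_out):
                for j in range(W_out):
                    hs, ws = i * stride, j * stride
                    window = x_pad[n, :, hs:hs + HH, ws:ws + WW]
                    # Dot product of the filter with the window, plus the filter's bias.
                    out[n, f, i, j] = np.sum(window * w[f]) + b[f]
    cache = (x, w, b, conv_param)
    return out, cache
```

The output size follows H_out = 1 + (H + 2·pad − HH) / stride; for a 4×4 input, a 2×2 filter, stride 2, and no padding, that gives a 2×2 output.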

def conv_relu_pool_forward(x, w, b, conv_param, pool_param):
    """
    Convenience layer that performs a convolution, a ReLU, and a pool.

    Inputs:
    - x: Input to the convolutional layer
    - w, b, conv_param: Weights and parameters for the convolutional layer
    - pool_param: Parameters for the pooling layer

    Returns a tuple of:
    - out: Output from the pooling layer
    - cache: Object to give to the backward pass
    """
    # Uses the naive (loop-based) conv and pooling layers,
    # unlike conv_relu_forward, which uses conv_forward_fast.
    a, conv_cache = layers.conv_forward_naive(x, w, b, conv_param)
    s, relu_cache = layers.relu_forward(a)
    out, pool_cache = layers.max_pool_forward_naive(s, pool_param)
    cache = (conv_cache, relu_cache, pool_cache)
    return out, cache


def conv_relu_pool_backward(dout, cache):
    """
    Backward pass for the conv-relu-pool convenience layer.
    """
    conv_cache, relu_cache, pool_cache = cache
    ds = layers.max_pool_backward_naive(dout, pool_cache)
    da = layers.relu_backward(ds, relu_cache)
    dx, dw, db = layers.conv_backward_naive(da, conv_cache)
    return dx, dw, db
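`conv_relu_pool_forward` and `conv_relu_pool_backward` rely on `layers.max_pool_forward_naive` and `layers.max_pool_backward_naive`. A minimal sketch of both, assuming `pool_param` holds `pool_height`, `pool_width`, and `stride` (assumed key names) and that the backward pass routes each upstream gradient only to the position of its window's maximum:

```python
import numpy as np


def max_pool_forward_naive(x, pool_param):
    """Max pooling over (N, C, H, W) input; returns out and a cache."""
    ph, pw = pool_param["pool_height"], pool_param["pool_width"]
    stride = pool_param["stride"]
    N, C, H, W = x.shape
    H_out = 1 + (H - ph) // stride
    W_out = 1 + (W - pw) // stride
    out = np.zeros((N, C, H_out, W_out))
    for i in range(H_out):
        for j in range(W_out):
            hs, ws = i * stride, j * stride
            window = x[:, :, hs:hs + ph, ws:ws + pw]
            # Maximum over the two spatial axes, for every sample and channel at once.
            out[:, :, i, j] = np.max(window, axis=(2, 3))
    return out, (x, pool_param)


def max_pool_backward_naive(dout, cache):
    """Route each upstream gradient to the argmax of its pooling window."""
    x, pool_param = cache
    ph, pw = pool_param["pool_height"], pool_param["pool_width"]
    stride = pool_param["stride"]
    N, C, H_out, W_out = dout.shape
    dx = np.zeros_like(x)
    for n in range(N):
        for c in range(C):
            for i in range(H_out):
                for j in range(W_out):
                    hs, ws = i * stride, j * stride
                    window = x[n, c, hs:hs + ph, ws:ws + pw]
                    # Row/column of the (first) maximum inside this window.
                    r, s = np.unravel_index(np.argmax(window), window.shape)
                    dx[n, c, hs + r, ws + s] += dout[n, c, i, j]
    return dx
```

Because only the maximum of each window affects the output, every other input position receives zero gradient; this is why the backward pass needs the original input `x` in its cache.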

layers.py

import numpy as np

'''
